Linode-native location prediction Website

A website that can be used to predict the name of the location in an image using machine learning.

Introduction

The project I built for the Linode and Hashnode hackathon is a location prediction website that predicts the name of a location in Asia from an image using machine learning. Anyone without prior experience in cloud computing or Linode cloud services can deploy this application using Terraform. This article covers the technologies used in the project and the workflow that makes the application easy to deploy to the cloud.

Demo Video🎞️

In the video, an image of the Grand Palace in Thailand is uploaded and its location is predicted by the machine learning model.

How to use the website

The website follows this workflow:

- Go to the website and click the Upload button to upload an image that is to be predicted using the machine learning model.

- Click on the Predict button to make predictions on the location of the image. The prediction is done in the back-end and results are sent to the front-end.

- After a few seconds, a popup appears showing the name of the predicted locations for the given image.

Features

- Machine learning is implemented to predict the name of the location from images.

- Automated end-to-end website deployment using Terraform.

- Deploying as Docker containers using a StackScript and a `docker-compose.yaml` file.

- Completely responsive frontend.

Frontend

The frontend is a relatively simple single-page website created using React and deployed to Linode.

Note: All deployment information is explained in the deployment section

Tech Stack

The tech stack used for the frontend is:

- React

- TypeScript

The project also uses styled-components and material-ui for designing the components. I have also used plain CSS for styles in some parts of the project.

Backend

The backend is where machine learning takes place and also from which the app connects to the database. The backend is also hosted on Linode.
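As a rough illustration of the upload-and-predict flow, here is a minimal Flask sketch. The route name `/predict`, the form field `image`, and the helper `predict_location` are assumptions made for this example, not the names used in the actual project.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def predict_location(image_bytes: bytes) -> str:
    """Hypothetical placeholder for the model and database lookup."""
    # The real backend would run the TensorFlow model here and map the
    # winning index to a location name stored in MongoDB.
    return "unknown"


@app.route("/predict", methods=["POST"])  # route name is an assumption
def predict():
    uploaded = request.files.get("image")  # form field name is an assumption
    if uploaded is None:
        return jsonify({"error": "no image uploaded"}), 400
    location = predict_location(uploaded.read())
    return jsonify({"location": location})
```

The frontend would then send the selected file as multipart form data and show the returned location in the popup.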

Tech Stack

The tech stack used for the backend is,

- Python

- Flask

Machine learning

Python's tensorflow package is used to run inference on a pre-trained model. The images sent from the frontend are resized to match the input specs required by the model using functions from the Pillow and numpy packages. The model's output is an array of 98949 probabilities, one per known location. The entry with the highest probability identifies the predicted location.
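The resizing and "pick the highest probability" steps could look roughly like the sketch below. The input size of 224x224 and the scaling to the range [0, 1] are assumptions, since the real values depend on the pre-trained model; the model call itself is left out.

```python
import numpy as np
from PIL import Image

# Assumed input size; the real value depends on the pre-trained model.
TARGET_SIZE = (224, 224)


def preprocess(image: Image.Image, size=TARGET_SIZE) -> np.ndarray:
    """Resize an image and turn it into a batch containing one float array."""
    resized = image.convert("RGB").resize(size)
    # Scaling pixel values to [0, 1] is an assumption about the model input.
    pixels = np.asarray(resized, dtype=np.float32) / 255.0
    return np.expand_dims(pixels, axis=0)  # shape: (1, height, width, 3)


def best_index(probabilities: np.ndarray) -> int:
    """Return the index of the highest probability in the model output."""
    return int(np.argmax(probabilities))
```

The index returned by `best_index` is what gets matched against the records in the database.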

Database

The website uses a MongoDB database deployed as a Linode managed database cluster. The database holds 98949 records, each representing the name of a location. The index of the result with the highest probability is matched with the id of a record in the database to find the location.

The backend uses utilities from the pymongo package to communicate with the MongoDB database.

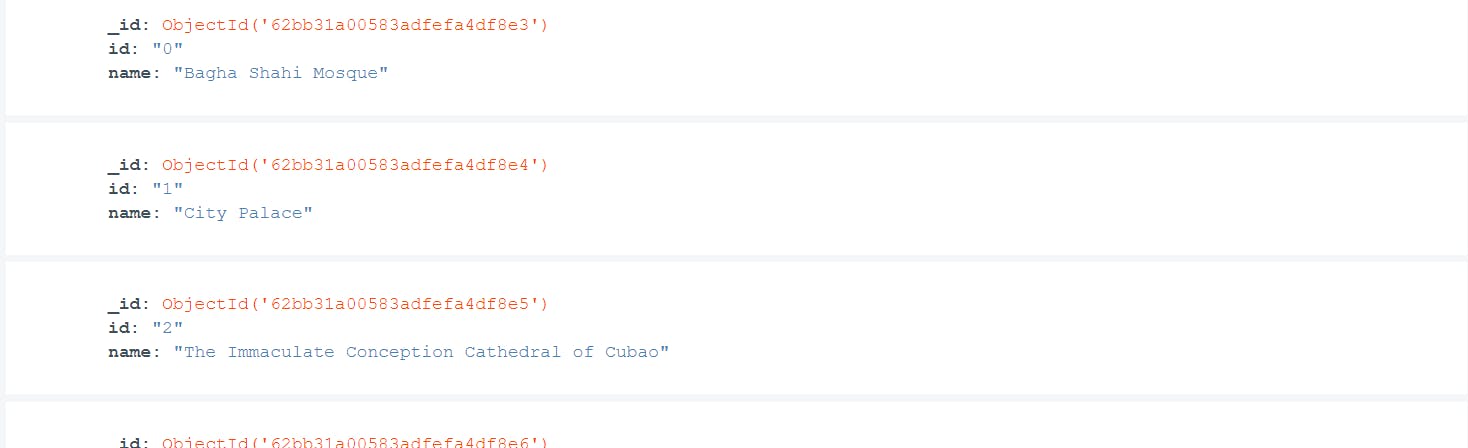

Every record in the database has an id, which is matched with the index of the highest probability returned by the machine learning model to get the name of the location.
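The lookup itself can be sketched as a single query. The field names `id` and `name` are assumptions about the record layout, and `collection` stands for any object with a pymongo-style `find_one` method, such as a pymongo collection.

```python
from typing import Any, Mapping, Optional


def find_location_name(collection: Any, class_index: int) -> Optional[str]:
    """Look up the location whose id equals the model's winning index."""
    # "id" and "name" are assumed field names; adjust them to the real schema.
    record: Optional[Mapping[str, Any]] = collection.find_one({"id": class_index})
    return None if record is None else record["name"]
```

Returning `None` for a missing record lets the caller decide how to report an unknown location instead of raising an error deep inside the lookup.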

The records can be downloaded from the GitHub link shared in the Links section at the bottom.

Deployment

Terraform

All deployments are made using Terraform directly from the command line. Terraform is a powerful tool for infrastructure as code (IaC) that automates the deployment of our resources and applications. A Terraform configuration can be thought of as a blueprint of our cloud environment. The main.tf file is the base file for Terraform. To manage a Linode environment, Terraform needs the Linode provider. To install it, add the following lines of code to the main.tf file and run the command terraform init.

    terraform {
      required_version = ">= 0.15"

      required_providers {
        linode = {
          source  = "linode/linode"
          version = "1.28.0"
        }
      }
    }

We must first get a Personal Access Token from Linode to use Terraform. You can follow this guide to get your token. Then add the following code to the main.tf file:

    provider "linode" {
      token = var.linode_personal_token
    }

Terraform uses two files together, terraform.tfvars and variables.tf, to manage secrets. Add the token to terraform.tfvars:

linode_personal_token = "your token"

We must also specify this data in variables.tf as follows,

    variable "linode_personal_token" {
      sensitive = true
    }

The sensitive option is used to indicate that the value should not be displayed in outputs. After setting this, we can start deploying our resources using terraform.

You can refer to the GitHub repository for the complete Terraform code used to deploy this project.

Deploying Linodes

The frontend and backend are deployed on Linodes with the following properties:

- Image: `linode/ubuntu20.04`

- Type: `g6-nanode-1`

A Linode of type `g6-nanode-1` costs $5 per month and is the cheapest one out there. The instances are deployed in the `ap-west` region. To deploy a Linode, we will need a key and a password:

- The keys can be generated using the `ssh-keygen` command.

- A strong password can be generated using the following command: `python -c "import secrets; print(secrets.token_urlsafe(32))"`
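If you prefer a small script over the one-liner, the password step could be written as below. The function name `generate_root_password` is a hypothetical name chosen for this sketch.

```python
import secrets


def generate_root_password(num_bytes: int = 32) -> str:
    """Return a URL-safe random string built from num_bytes random bytes."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Print one password so it can be copied into terraform.tfvars.
    print(generate_root_password())
```

The `secrets` module draws from the operating system's secure random source, which is why it is preferred over `random` for passwords.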

Deploying MongoDB database

MongoDB is a NoSQL database that stores data as JSON-like documents. The MongoDB cluster deployed for this project has the following properties:

- engine_id: `mongodb/4.4.10`

- type: `g6-nanode-1`

- allow_list: [array of IP addresses that can access the db]

- cluster_size: 1

The MongoDB database costs $15 per month. We can access it using the hostname, username, and password given by Linode after deployment. I recommend using MongoDB Compass to connect to the database. You can find how to do this here.

If you want MongoDB to perform replication, you can increase the cluster size to 2 or 3.

Then you can create a new collection and import the CSV file containing the location information given in the GitHub repository.
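As an alternative to importing through a GUI, the CSV could be converted into documents with a short script. This is only a sketch: the column names `id` and `name` are assumptions about the CSV layout, so check the actual file before using it.

```python
import csv
from typing import Any, Dict, List, TextIO


def read_location_records(csv_file: TextIO) -> List[Dict[str, Any]]:
    """Convert CSV rows into documents with an integer id and a name."""
    # Column names "id" and "name" are assumptions about the CSV layout.
    return [
        {"id": int(row["id"]), "name": row["name"]}
        for row in csv.DictReader(csv_file)
    ]
```

With pymongo, the resulting list can be passed to the collection's `insert_many` method to load all records in one call.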

Docker

This project uses Docker to deploy the frontend and backend separately. Both are dockerized with an NGINX web server to make them accessible over the internet.

There are two main files: a Dockerfile and a docker-compose.yaml.

The Dockerfile describes how to build the image, and docker-compose is used to run the image in containers.

StackScripts

StackScripts are shell scripts that run on a Linode machine after the machine is deployed. This project uses two StackScripts, one each for the frontend and the backend. They deploy the web application on the Linodes right after the machines are created.

By using these StackScripts, we can deploy a web application very easily. The backend StackScript also sets the environment variables required to connect to the database. These variables are provided directly by Terraform.
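On the backend side, reading those environment variables might look like the sketch below. The variable names `DB_USER`, `DB_PASSWORD`, and `DB_HOST` are hypothetical, and the URI leaves out any port or TLS options the managed database may require.

```python
import os
from urllib.parse import quote_plus


def mongo_uri_from_env(env=os.environ) -> str:
    """Build a MongoDB connection URI from environment variables."""
    # The variable names below are hypothetical; use whatever names the
    # StackScript actually exports.
    user = quote_plus(env["DB_USER"])
    password = quote_plus(env["DB_PASSWORD"])
    host = env["DB_HOST"]
    return f"mongodb://{user}:{password}@{host}/"
```

Escaping the username and password with `quote_plus` keeps characters such as `@` or `/` in a generated password from breaking the URI.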

Terraform is a powerful tool, as we only need the access token from our Linode cloud account to create an entire ecosystem and deploy the web application. Everything from end to end is automated. This provides a convenient abstraction layer for people new to Linode or any other cloud computing service. The command used to apply a Terraform configuration is terraform apply, which makes changes to the cloud environment according to the code.

Links

- GitHub link: GitHub Repository

- Live Website: something-amzing.live

Limitations

- This website can only be used to predict places in Asia.

- The accuracy of prediction is not 100% and depends on the image provided and the machine learning model.

Future Ideas

- Implementing a machine learning model to predict places all over the world

- Associating this website with a full-scale tourism website

Conclusion

The project was built to showcase the power of infrastructure as code (IaC) on Linode cloud services and how machine learning can be used to make innovative websites. Many amazing projects were built throughout the hackathon, and I am happy to be a part of it. Thanks to Hashnode and Linode for this amazing hackathon.